Whether people can work successfully with these new technologies depends on a number of factors. “In my experience, the key is how competent and empowered someone feels,” says Grote. For example, those who are less well educated are more likely to worry that their job may be at risk. Equally important is how the company communicates its future technological path. “Employees need a clear idea of how they can adapt and how their employer is going to support them on that journey,” says Grote.

Ideally, this process would also address the question of which tasks we regard as fulfilling. Hofmann cites the example of language models that are optimised for programming: “A piece of code that might take me ten hours to write perfectly can be generated by the models in a fraction of a second,” he says. That frees up valuable time for other activities. “But if someone used to enjoy spending a full day programming, they’re not going to be very happy about that development,” he says.

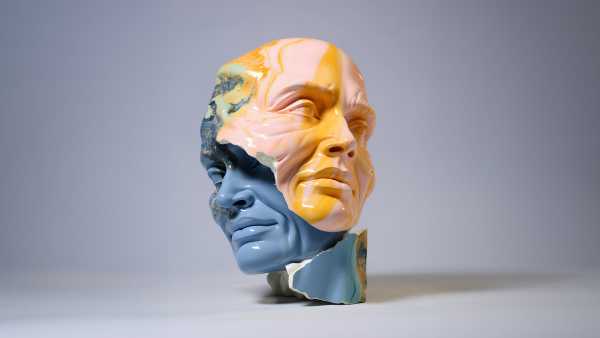

From programming to ChatGPT, language models are making inroads into many areas of society. Grote argues that human language is something quite special. “Spoken language is what makes us unique,” she says. “Language is a creative process that expresses thoughts through words.” This is the human ability that language models are currently attempting to emulate.

It makes a big difference whether a text has been written by AI or by a human, according to Hofmann: “Whenever we use language, we’re also expressing our feelings and experiences. AI doesn’t have recourse to that experience, however well written its text may be.” During his studies, he also became interested in philosophy and now wonders whether intelligence and being need to be tied to a biological substrate. Ultimately, he says, it’s a question of where we draw the line between artificial intelligence and being human.